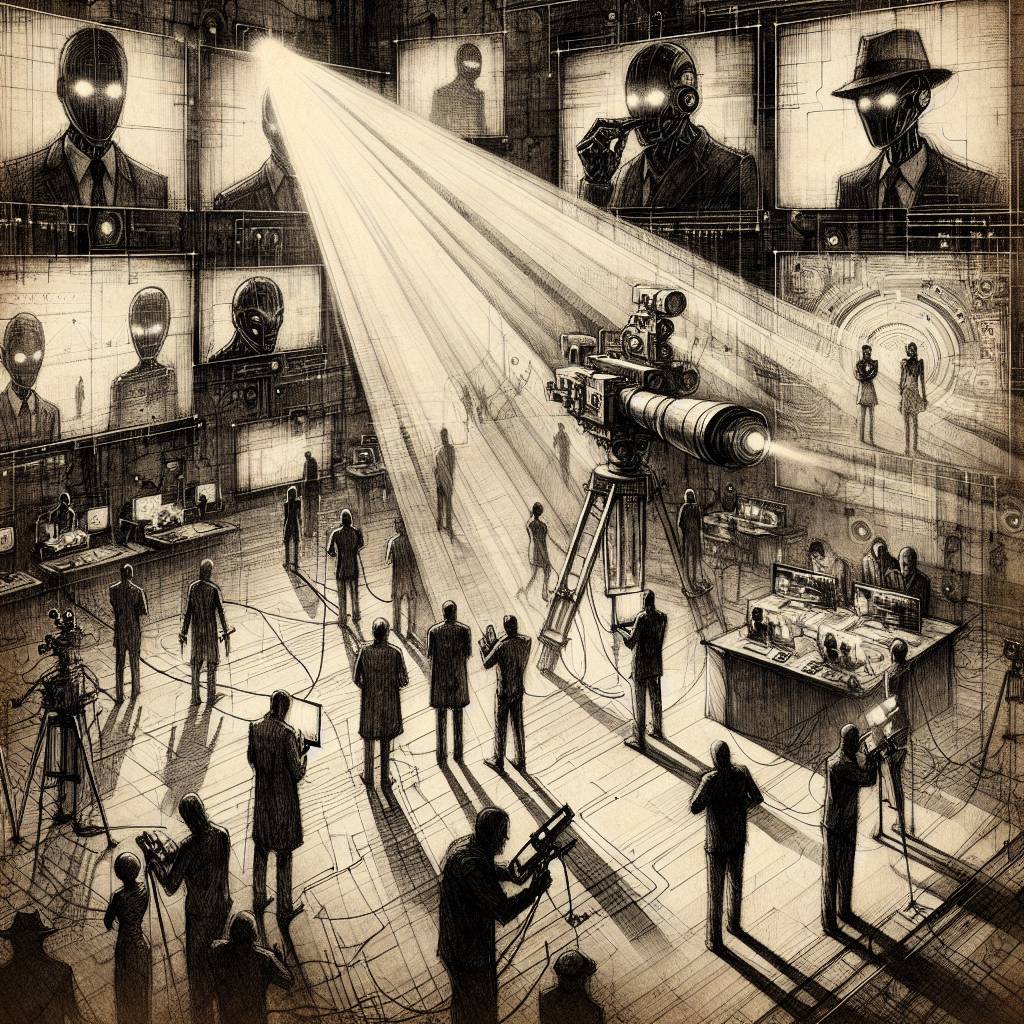

North Korean Shenanigans: How Kimsuky Used ChatGPT for Espionage Hijinks

North Korean spies allegedly used ChatGPT to craft a fake military ID in a South Korean espionage plot. The Kimsuky group reportedly bypassed OpenAI’s safeguards with clever prompt engineering. The deepfake ID was then used in spear-phishing emails, showcasing an alarming yet comedic twist in espionage tactics.

Hot Take:

Why stop at deepfaking IDs when you can deepfake an entire military parade? North Korea’s Kimsuky group has clearly decided that if you can’t beat ’em, fake ’em! Who knows, maybe next they’ll deepfake a peace treaty. The creativity is impressive, but this is one art project that might get you a little more than an F for “Foul Play.”

Key Points:

– North Korean hackers allegedly used ChatGPT to create a fake military ID for espionage.

– A group called Kimsuky executed the spear-phishing attack using deepfake technology.

– They tricked ChatGPT into producing the ID by framing it as a “sample design.”

– The attack specifically targeted a South Korean defense-related institution.

– OpenAI has been cracking down on misuse of its models, including attempts by North Korean actors.