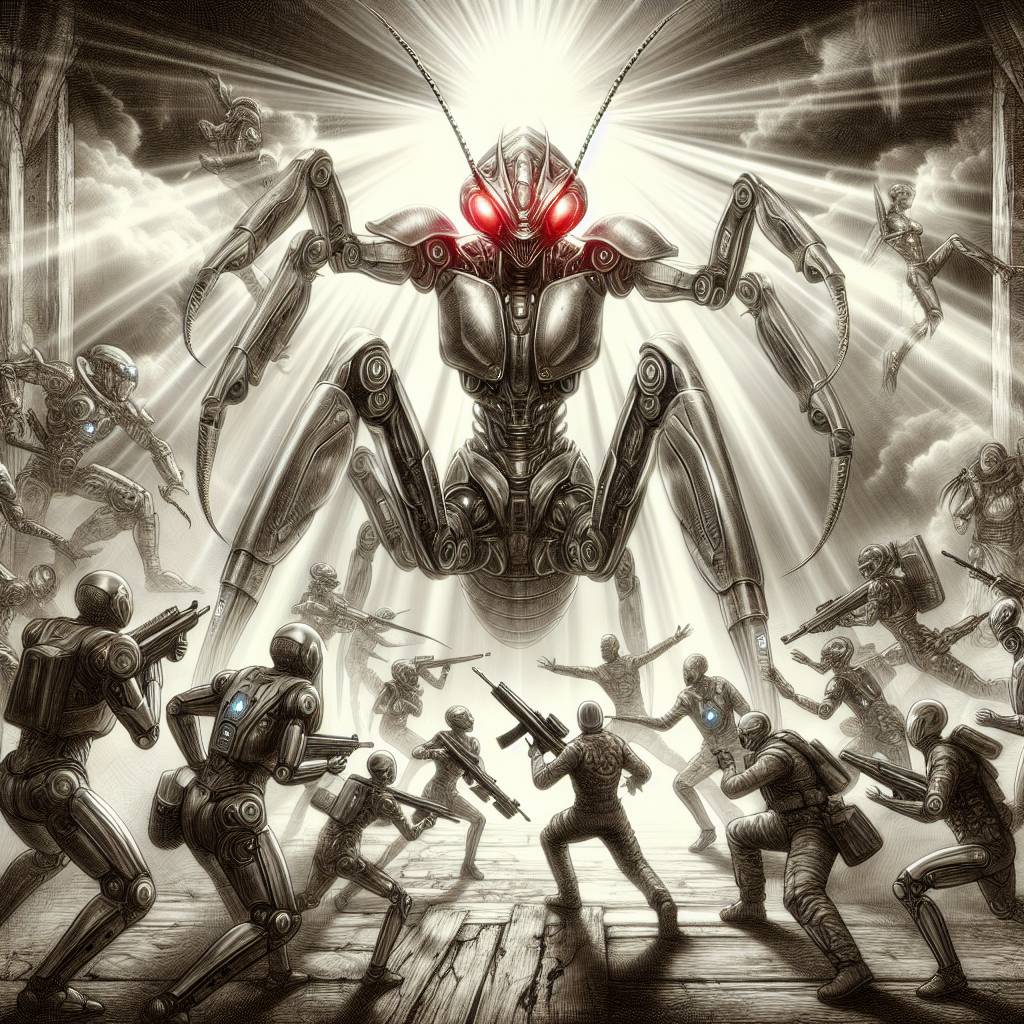

Mantis Strikes Back: How Cyber Defenders Turn AI Attackers into Digital Prey

Cyberattackers using large-language models better watch out: Mantis, a defensive AI system from George Mason University, is here to turn the tables. By cleverly using prompt-injection attacks, Mantis can trick these malicious LLMs into following its lead. It’s a digital game of cat and mouse, but with AIs as the prey.

Hot Take:

Who knew that AI-vs-AI could be the newest action-packed thriller? With Mantis playing the role of the digital double-agent, it’s basically Cybersecurity’s answer to James Bond. Move over, 007, there’s a new hero in town, and it’s armed with prompt injections instead of a Walther PPK.

Key Points:

- Mantis uses deceptive techniques to thwart AI-driven cyberattacks by sending back prompt-injection payloads.

- Designed by researchers at George Mason University, Mantis exploits the “greedy approach” of LLMs.

- Prompt injections can mislead AI attackers into taking unintended actions, like opening a reverse shell.

- The system operates autonomously to disrupt and counteract LLM-based attacks.

- Prompt-injection vulnerabilities remain a significant challenge for securing AI systems.

Already a member? Log in here