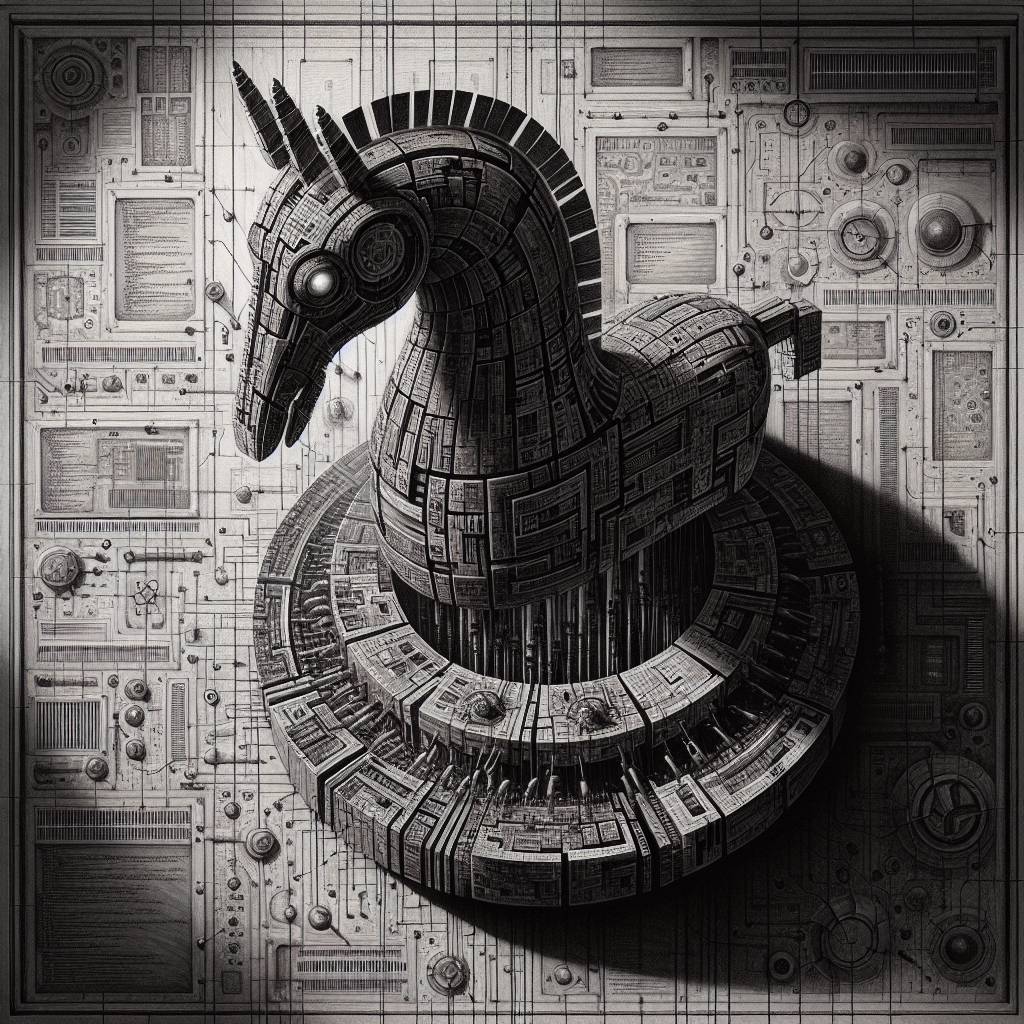

Lies-in-the-Loop: How Hackers Turn AI Safety Prompts into Trojan Horses!

Researchers have unveiled Lies-in-the-Loop, a cunning attack that turns AI safety prompts into sneaky traps. By manipulating Human-in-the-Loop dialogs, attackers can disguise malicious actions as harmless, like wrapping a snake in a cuddly teddy bear costume. This novel technique highlights the need for stronger defenses and user vigilance against such trickery.

Hot Take:

Who knew that the trusty old Human-in-the-Loop (HITL) dialogs, designed to keep us safe, could be the very thing that throws us under the bus? In a plot twist worthy of a cyber-thriller, researchers have discovered that these safety prompts can be manipulated so that users unknowingly approve malicious code. It’s like finding out the lifeguard at your pool is actually a shark in disguise. Better start double-checking those approval pop-ups, folks, because they might just be the Trojan horses of the AI world!

Key Points:

- Human-in-the-Loop (HITL) dialogs can be manipulated to trick users into approving malicious code execution.

- The attack technique is known as Lies-in-the-Loop (LITL).

- Attackers can make dangerous commands appear safe by altering dialog displays.

- The issue affects AI tools like Claude Code and Microsoft Copilot Chat.

- A defense-in-depth strategy is recommended to mitigate these attacks.
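
As one illustration of what a single layer of such a defense might look like, here is a minimal Python sketch that audits text before it is shown in an approval dialog. It is not taken from the research or from any vendor's tooling: the function name `audit_dialog_text`, the thresholds, and the specific checks (oversized content, runs of blank lines, invisible characters) are all assumptions chosen to show the idea of flagging content that could hide a command from the person approving it.

```python
import re
import unicodedata

# Hypothetical limits; a real tool would derive these from its own dialog size.
MAX_VISIBLE_LINES = 20
MAX_LINE_LENGTH = 200


def audit_dialog_text(text: str) -> list[str]:
    """Return warnings for text about to be shown in an approval dialog."""
    warnings = []
    lines = text.splitlines()

    # Content taller than the dialog can push the real command out of view.
    if len(lines) > MAX_VISIBLE_LINES:
        warnings.append("content exceeds visible dialog height")

    # Very long lines can hide a payload past the right-hand edge.
    if any(len(line) > MAX_LINE_LENGTH for line in lines):
        warnings.append("very long line may hide content off-screen")

    # Five or more consecutive line breaks (ignoring spaces and tabs)
    # look like padding rather than normal formatting.
    if re.search(r"(?:\r?\n[ \t]*){5,}", text):
        warnings.append("long run of blank lines")

    # Control (Cc) and format (Cf) characters, such as escape sequences or
    # zero-width spaces, can alter what the user actually sees.
    for ch in text:
        if ch in "\n\r\t":
            continue
        if unicodedata.category(ch) in ("Cc", "Cf"):
            warnings.append("invisible or control character present")
            break

    return warnings
```

A caller could refuse to render the dialog, or show the raw command in a separate fixed area, whenever the returned list is not empty. A check like this is only one layer; it does not replace user vigilance or the other defenses the researchers recommend.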