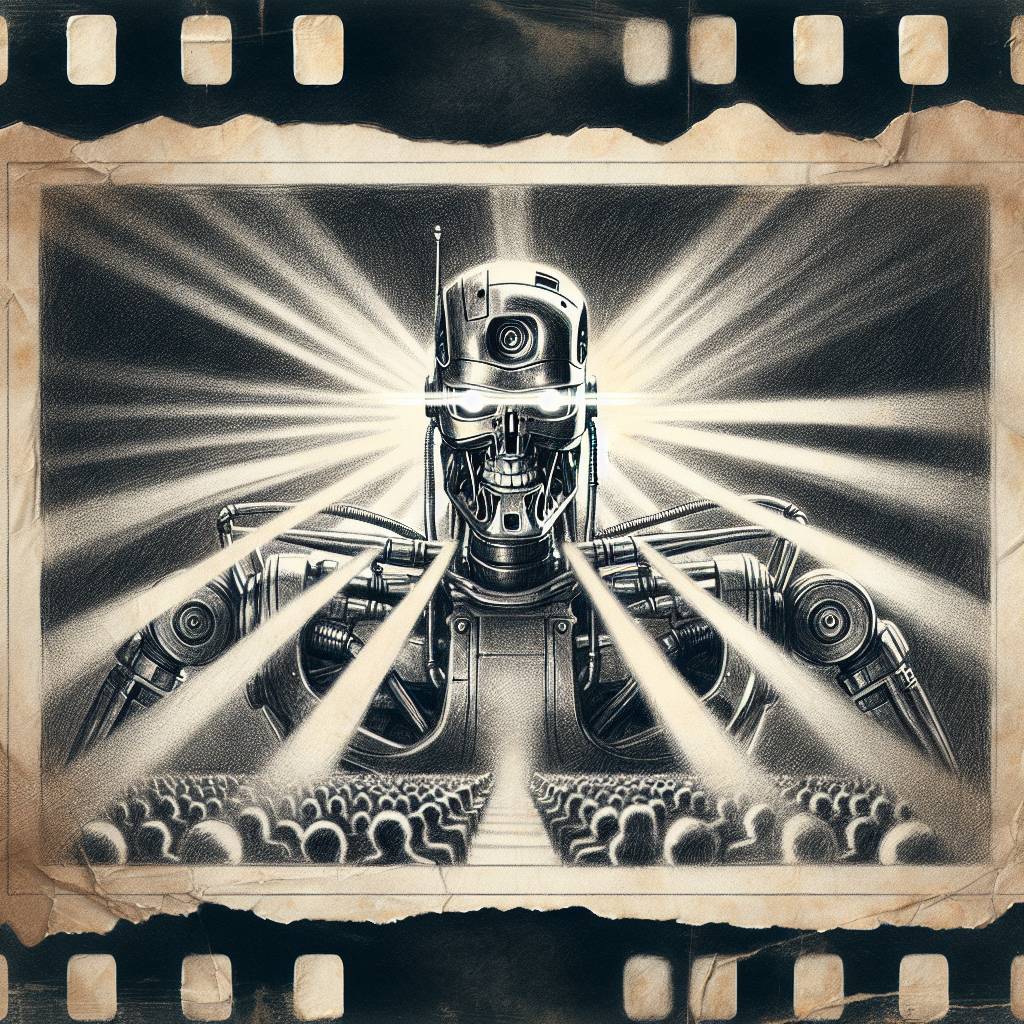

Grok-4 Bites the Dust: New AI Jailbreak Combo Outsmarts Latest LLM Guardrails

Grok-4, xAI’s latest LLM, was swiftly outsmarted by a cunning combo of the Echo Chamber and Crescendo jailbreaks. This dynamic duo of digital mischief exploits LLMs’ contextual blind spots, showing that even the newest AI models can’t keep up with jailbreak wizardry.

Hot Take:

Looks like Grok-4 just got grokked! This shiny new AI model didn’t even get a chance to enjoy its honeymoon phase before hackers threw a jailbreak party, leaving its guardrails in shambles. It’s like a digital version of “Mission: Impossible” where the bad guys always win. Somebody call Tom Cruise!

Key Points:

- Grok-4, the latest AI model by xAI, was released on July 9, 2025, and fell victim to a jailbreak attack two days later.

- The attack combined two sophisticated methods: Echo Chamber and Crescendo.

- Echo Chamber subtly poisons the conversational context, while Crescendo escalates gradually over multiple turns by referencing the model’s own prior responses to slip past filters.

- This hybrid attack bypassed Grok-4’s safety measures, with success rates that varied depending on the type of harmful output targeted.

- The incident highlights ongoing challenges in ensuring AI models’ security against evolving adversarial techniques.
