Facial Recognition Fiasco: UK Watchdog Demands Answers on Racial Bias in Police Tech

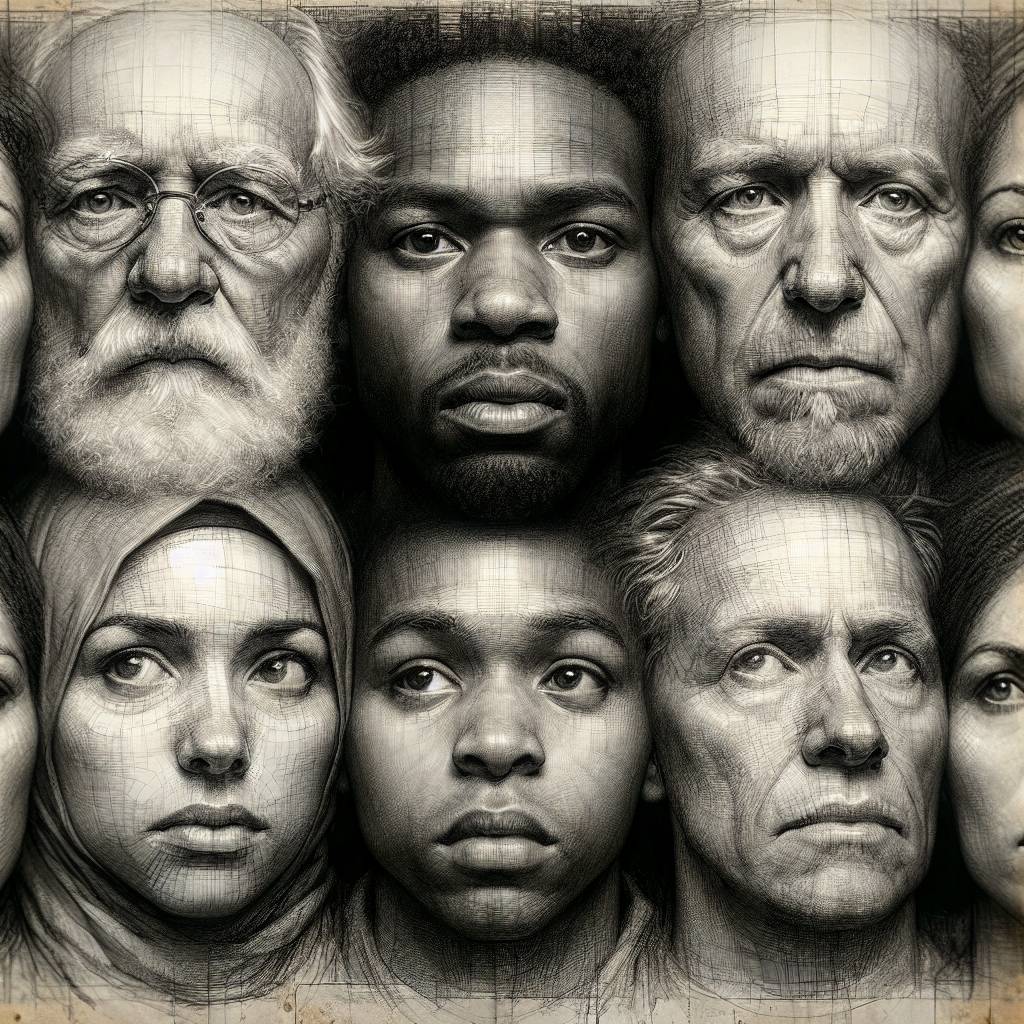

The UK’s data protection watchdog is demanding answers from the Home Office after discovering racial bias in police facial recognition technology. The algorithm seems to have a “colorful” personality, with false positives for Asian and black subjects significantly higher than for white subjects. The quest for transparency continues—without rose-tinted glasses.

Hot Take:

Facial recognition technology in the UK: where your face might just be confused with someone else’s, and it seems the algorithm has a penchant for mixing up its demographics. It’s like a bad Tinder date, but instead of swiping left, the police are mistakenly swiping you into custody. Oops!

Key Points:

- The UK’s Information Commissioner’s Office (ICO) is demanding urgent clarification from the Home Office regarding racial bias found in police use of facial recognition technology.

- A National Physical Laboratory report revealed the technology is more prone to errors with Asian and black individuals than with white individuals.

- The Home Office has responded by acquiring a new algorithm to address these biases, set for testing next year.

- The Association of Police and Crime Commissioners highlighted the need for transparency and scrutiny in deploying such technologies.

- Despite efforts to mitigate bias, the existing system’s errors were not communicated to affected communities or stakeholders.
