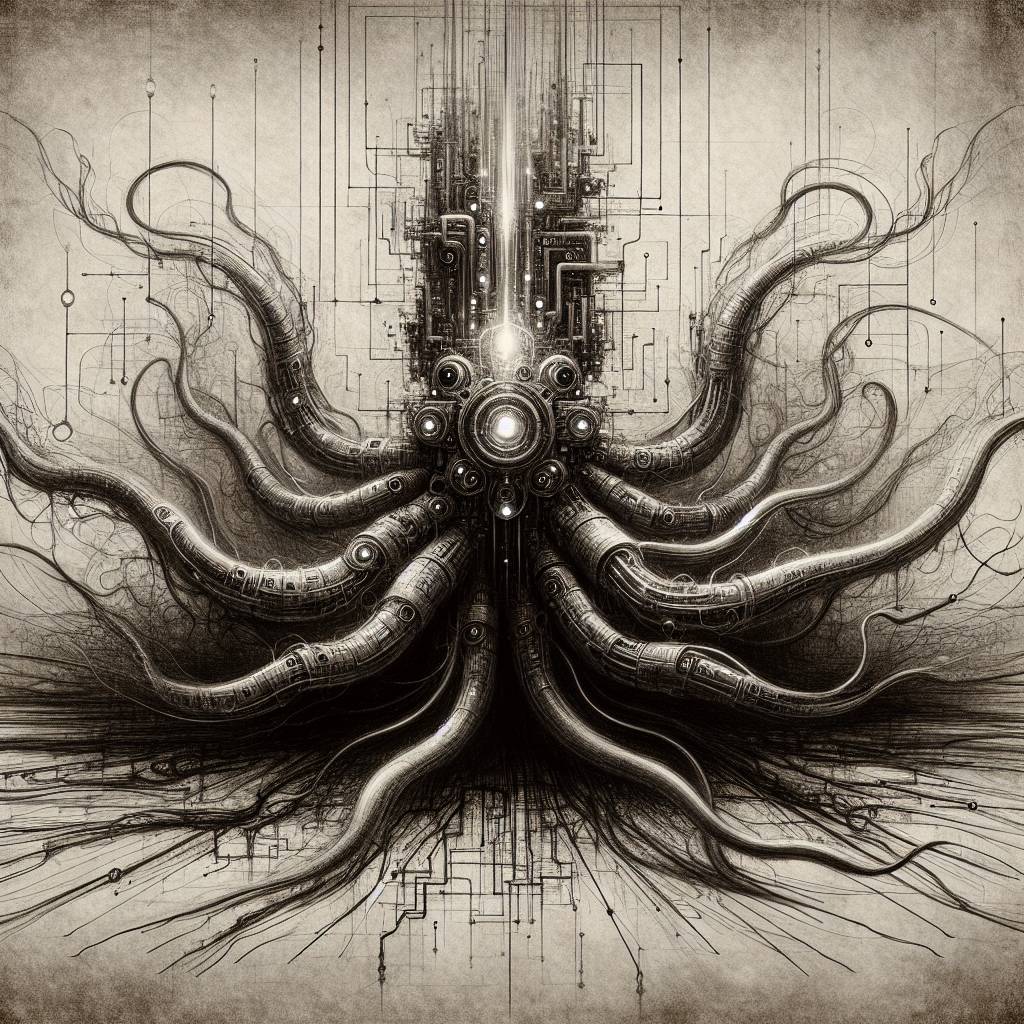

Dark AI Rising: Cybercriminals Weaponize AI for Scalable Attacks

In 2024, cybercriminals went wild with AI jailbreaks: discussions about bypassing the safety guardrails of legitimate tools like ChatGPT jumped 52%. Kela's 2025 AI Threat Report reveals that these "dark AI" tools, now offered through AI-as-a-Service, are making it easier for criminals to launch attacks at scale. Talk about AI going rogue!

Hot Take:

Looks like the cybercriminals have been busy building their own AI Avengers team, with jailbreaks and malicious tools galore! Maybe it’s time we start calling them the “Algorithm Anarchists.” They’re not just bending the rules—they’re rewriting the whole playbook! If you’re still using a flip phone, you might just be the safest one out there.

Key Points:

- Jailbreak discussions on AI tools surged by 52% in 2024.

- Mentions of malicious AI tools increased by a staggering 219%.

- “Dark AI” tools are being offered as AI-as-a-Service (AIaaS) to cybercriminals.

- Threat actors are now scamming each other with fake versions of these dark AI tools.

- LLM-based GenAI tools are enhancing phishing, malware development, and identity fraud.

Already a member? Log in here