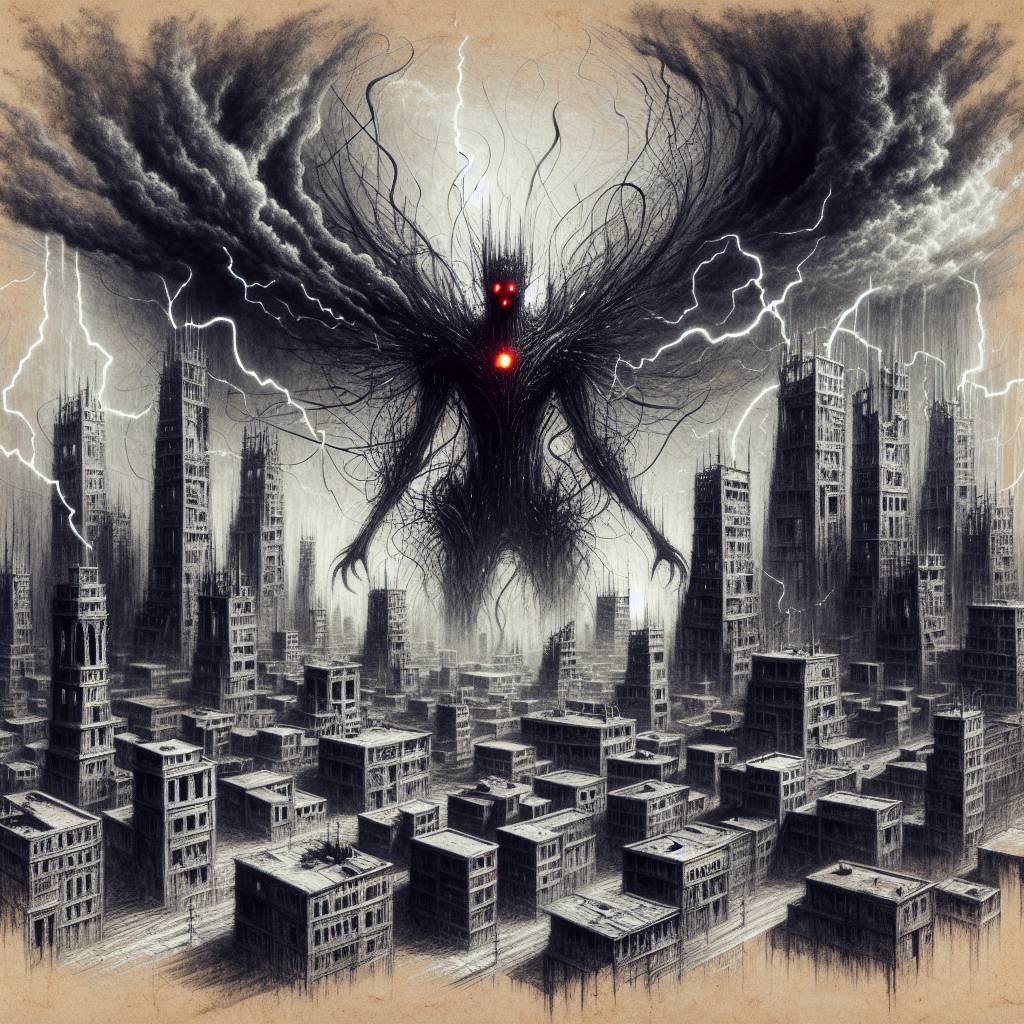

Cybercriminals Unleash Rogue AI: The Dark Side of Language Models

Cybercriminals are turning Large Language Models (LLMs) into their new partners in crime, exploiting these AI tools to mount more sophisticated attacks. From crafting malware to generating phishing emails, these digital miscreants are giving “thinking outside the box” a whole new meaning, just not the legal kind.

Hot Take:

Oh, how the mighty have fallen! Large Language Models (LLMs), once the shining knights of AI innovation, are now being corrupted by cybercriminals. It’s like watching your favorite superhero turn into a villain, except with more phishing emails and less dramatic capes. Talos’s latest research paints a picture of a not-so-distant future where AI is not just a tool for good, but also a secret weapon in the cyber underworld’s arsenal. Lock up your algorithms, folks, because it’s getting wild out there!

Key Points:

- Cybercriminals are manipulating LLMs for sophisticated attacks.

- They rely on three approaches: using uncensored LLMs, building custom criminal LLMs, and jailbreaking legitimate LLMs.

- FraudGPT and other malicious models are being sold on the dark web.

- LLMs are used to write malware, produce phishing content, and support criminal research.

- Even LLMs themselves are targeted with backdoors and data poisoning.