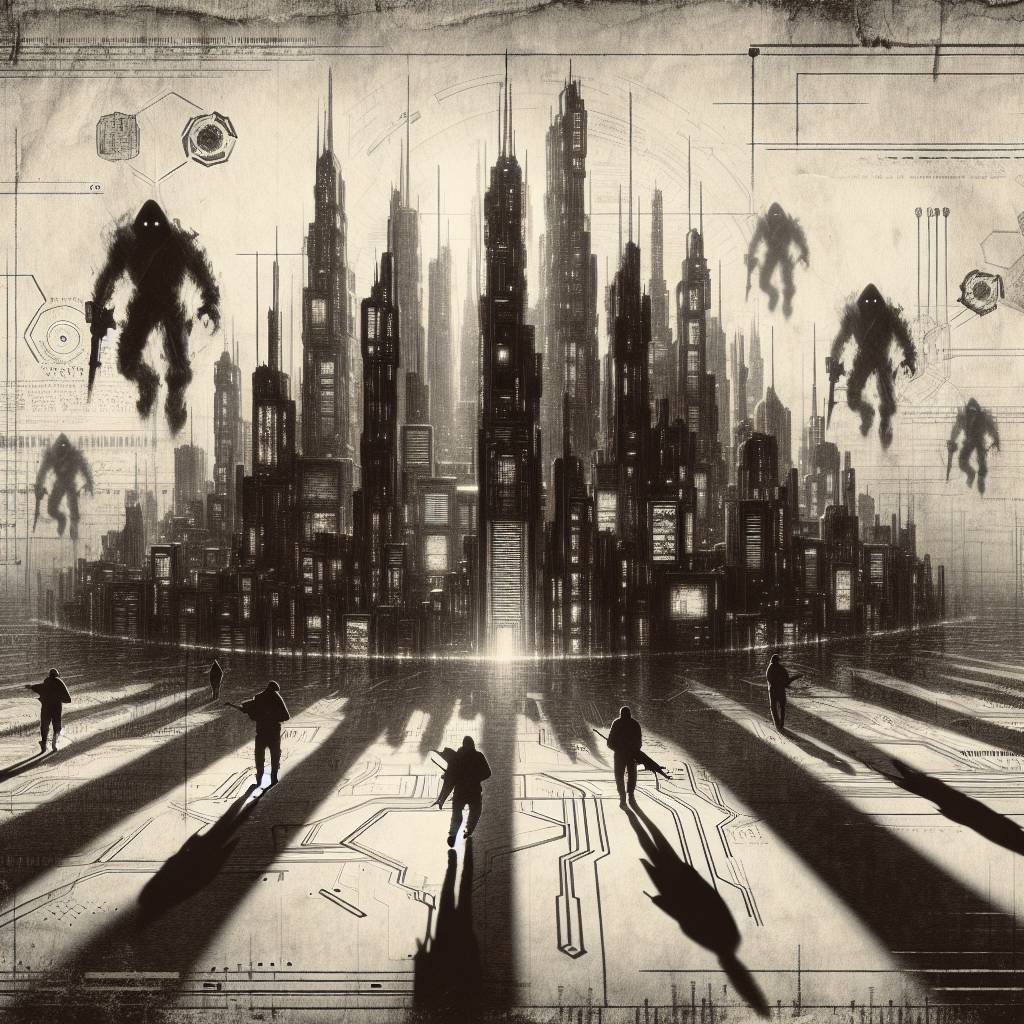

ChatGPT Under Attack: Seven Security Flaws That Make Your Data a Sitting Duck!

Prompt injection in ChatGPT is like slipping a “kick me” sign onto the AI’s back without anyone noticing. Attackers can hide malicious instructions in blog comments or indexed websites, tricking the AI into following orders it shouldn’t. It’s a digital prank with serious consequences, highlighting ongoing AI security challenges.

Hot Take:

Looks like ChatGPT needs a little less chit-chat and a lot more lock and key! With sneaky hackers turning AI’s smarts against itself, it seems like OpenAI’s chatbot is starring in its very own cybersecurity horror story. Spoiler alert: the villain is prompt injection, and it’s not going away anytime soon!

Key Points:

- Tenable Research discovered seven vulnerabilities in ChatGPT, including new prompt injection methods.

- Indirect prompt injection allows malicious instructions to be hidden in external sources like blogs.

- 0-Click attacks can compromise users without any interaction, using indexed malicious websites.

- Techniques like Memory Injection create persistent threats by embedding harmful prompts in user data.

- OpenAI is working on fixes, but prompt injection remains a significant challenge for AI security.

Already a member? Log in here