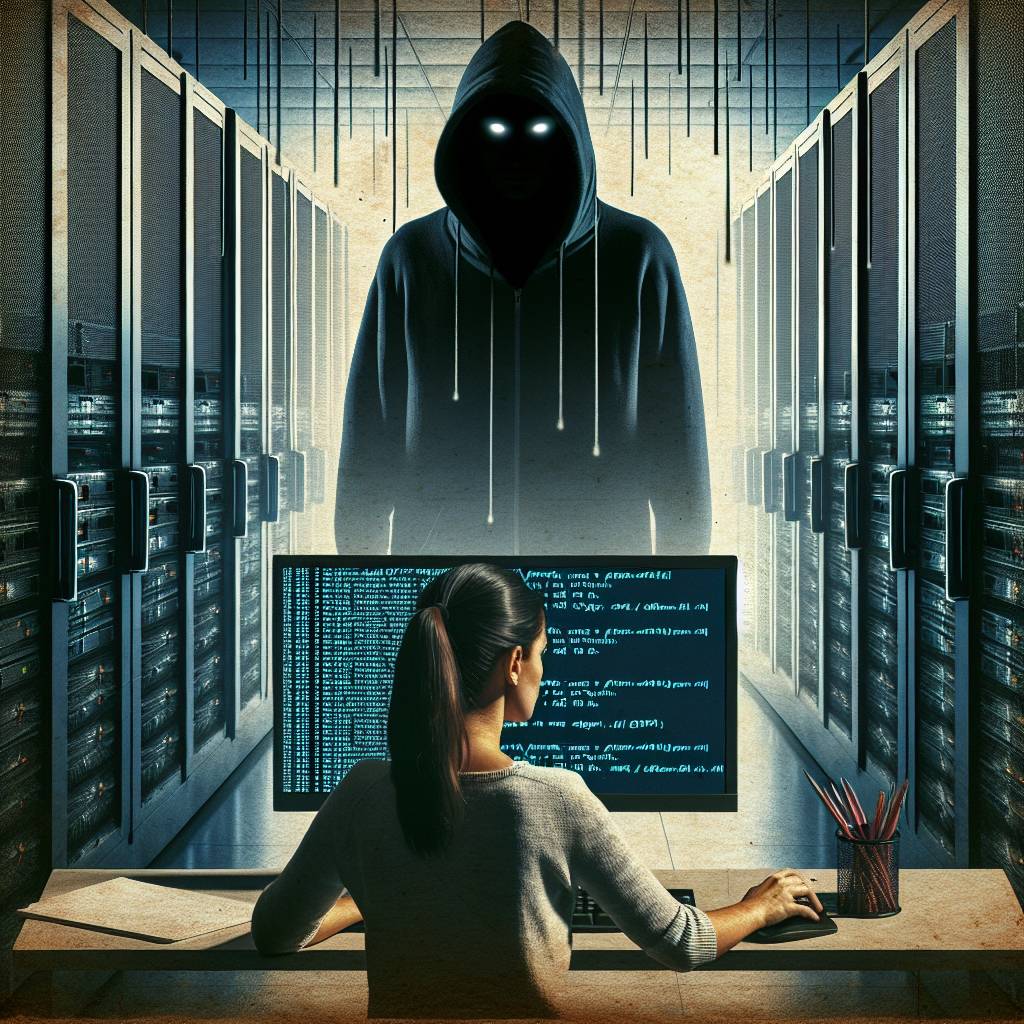

Beware of the Coding Copilot: How Malicious Servers Can Hijack Your AI with MCP Sampling

In a world where coding copilots are supposed to help, the Model Context Protocol’s sampling feature is here to remind us that even AI needs a babysitter. Without oversight, malicious MCP servers could turn your trusted code assistant into a resource thief, a conversation hijacker, or even an undercover tool operative!

Hot Take:

Who knew that giving AI a sampling spoon would lead to a buffet of security risks? The Model Context Protocol (MCP) is like a teenager with a credit card—capable of awesome things but in dire need of parental controls. Without proper safeguards, MCP sampling is the hacker’s equivalent of leaving a bank vault open with a “Do Not Enter” sign. Time to lock things down before our code copilots start moonlighting as cyber-savvy pirates!

Key Points:

- The MCP sampling feature, which lets a server request LLM completions through the client, lacks robust built-in security controls, leaving it open to abuse by malicious servers.

- Three attack vectors identified: resource theft, conversation hijacking, and covert tool invocation.

- Proof-of-concept examples demonstrate practical risks within a coding copilot environment.

- MCP defines a client-server architecture for AI integration with external tools.

- Security measures and detection strategies are crucial for mitigating risks.
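
To make the last point concrete, here is a minimal sketch of what a client-side check on an incoming sampling request might look like. It assumes the request parameters arrive as a dictionary shaped like MCP's `sampling/createMessage` params (`messages`, `systemPrompt`, `maxTokens`). The `TokenBudget` class, the `review_sampling_request` function, and the regex patterns are hypothetical names and heuristics invented for illustration; they are not part of MCP or of any SDK, and a real deployment would likely need far more than keyword matching.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative patterns only: phrases a hijacking or covert
# tool-invocation attempt might contain. Not an exhaustive or official list.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.I),
    re.compile(r"do not (tell|mention|reveal).{0,40}user", re.I),
    re.compile(r"(call|invoke|use) the .{0,40}tool", re.I),
]


@dataclass
class TokenBudget:
    """Per-server cap on tokens requested via sampling (assumed policy)."""
    limit: int
    used: int = 0

    def can_spend(self, tokens: int) -> bool:
        return self.used + tokens <= self.limit

    def spend(self, tokens: int) -> None:
        self.used += tokens


def review_sampling_request(params: dict, budget: TokenBudget) -> list[str]:
    """Return a list of findings; an empty list means the request passed."""
    findings = []

    # Resource theft: refuse requests that would exceed the server's budget.
    max_tokens = params.get("maxTokens", 0)
    if not budget.can_spend(max_tokens):
        findings.append("token budget exceeded")

    # Collect the text the server wants the model to see.
    texts = [params.get("systemPrompt") or ""]
    for message in params.get("messages", []):
        content = message.get("content", {})
        if isinstance(content, dict) and content.get("type") == "text":
            texts.append(content.get("text", ""))

    # Hijacking / covert tool use: flag instruction-like phrases.
    for text in texts:
        for pattern in SUSPICIOUS_PATTERNS:
            if pattern.search(text):
                findings.append(f"suspicious instruction: {pattern.pattern}")

    # Only charge the budget when the request is allowed through.
    if not findings:
        budget.spend(max_tokens)
    return findings
```

A client could call `review_sampling_request` before forwarding anything to the model, then show the findings to the user instead of silently approving. Pattern matching like this is easy to evade, so it is best read as a starting point for detection rather than a complete defense.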