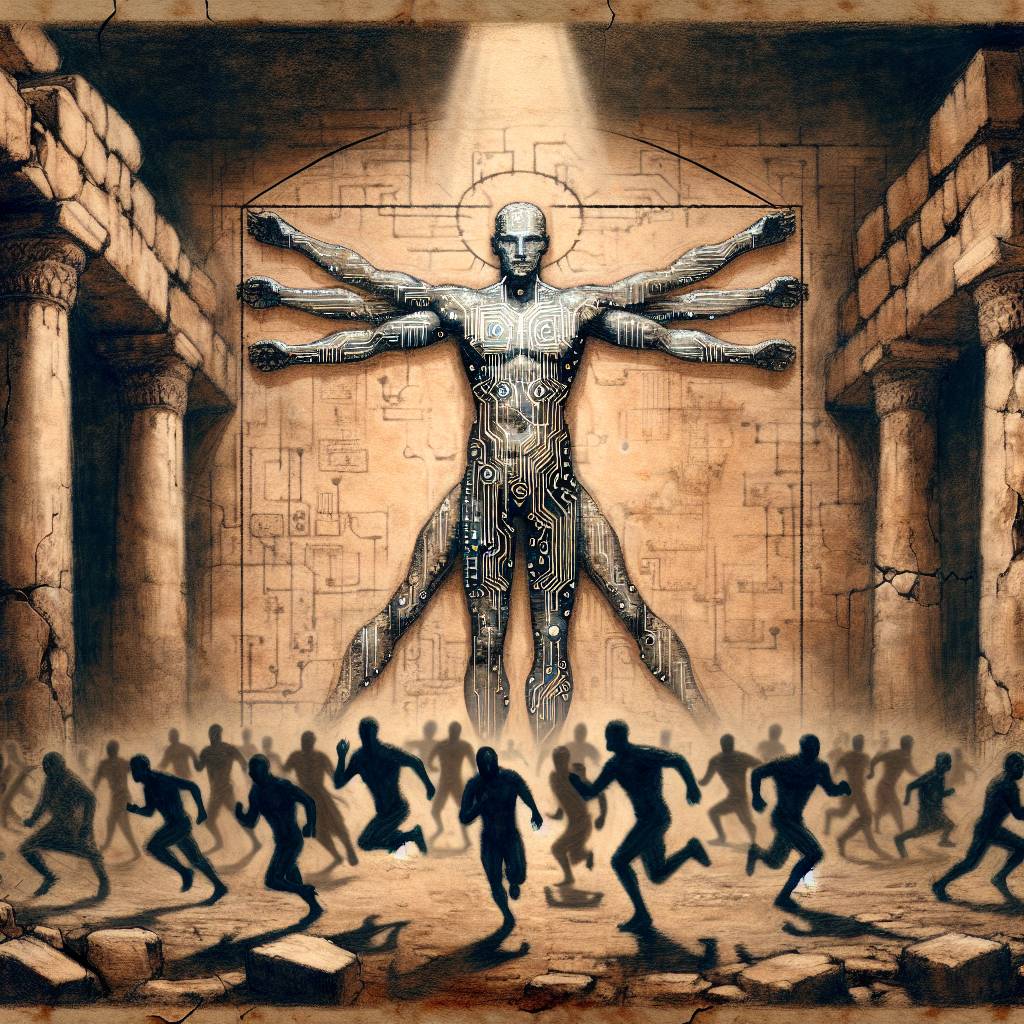

AI’s Achilles Heel: Unmasking Vulnerabilities with Red Teaming

Red teaming is the ultimate stress test for AI: simulated attacks that poke and prod a system to expose its vulnerabilities. Think of it as boot camp for AI, making sure a system is ready for real-world challenges. By identifying weaknesses, red teaming helps AI become more resilient and reliable. It’s like giving AI a tough-love workout!

Hot Take:

In a world where AI is the new tech sheriff, red teaming is like sending in the clowns to test if the sheriff’s gun is loaded with blanks. It’s all fun and games until someone gets an algorithmic pie in the face, but these antics are necessary to ensure our AI overlords don’t turn any more sinister than Skynet on a bad hair day.

Key Points:

- Red teaming simulates attacks to uncover AI vulnerabilities.

- Originated in the military, now critical in cybersecurity.

- Identifies biases and improves AI decision-making.

- Vital for finance, healthcare, and autonomous vehicle safety.

- Promotes ethical AI deployment through transparency and fairness.
