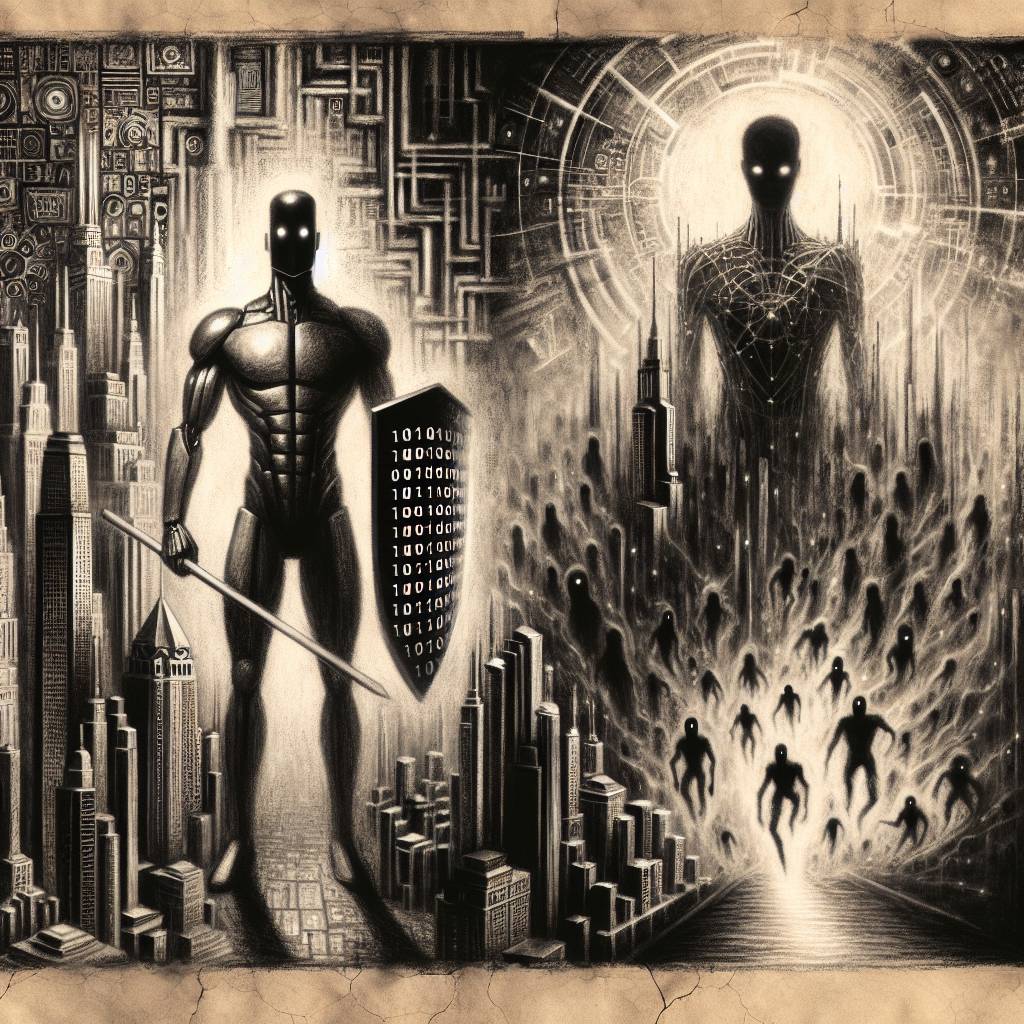

AI Gatekeeper: The Heroic Task of Keeping AI From Going Rogue

Zico Kolter’s role at OpenAI is no joke: he leads a panel that can halt the release of new AI systems it deems unsafe. Think of him as the tech world’s safety net, ensuring AI doesn’t go from helpful assistant to supervillain sidekick. His mission? Keep AI in check, and maybe save humanity while he’s at it.

Hot Take:

It seems like OpenAI has appointed a real-life superhero team led by Professor Zico Kolter, whose mission is to save humanity from rogue AI. Forget the Avengers; we’ve got the “AI Defenders” now! With ChatGPT on one side and potential doomsday scenarios on the other, Kolter’s panel might just be the last line of defense between our world and an AI apocalypse. It’s like a sci-fi movie, only with more spreadsheets and board meetings!

Key Points:

- Zico Kolter leads a four-member panel at OpenAI that assesses the safety of AI systems.

- The committee can delay or halt AI releases it deems unsafe.

- OpenAI’s restructuring includes a commitment to prioritize safety over profits.

- Kolter’s panel includes former US Army General Paul Nakasone.

- OpenAI faced criticism and legal challenges over AI safety concerns.
