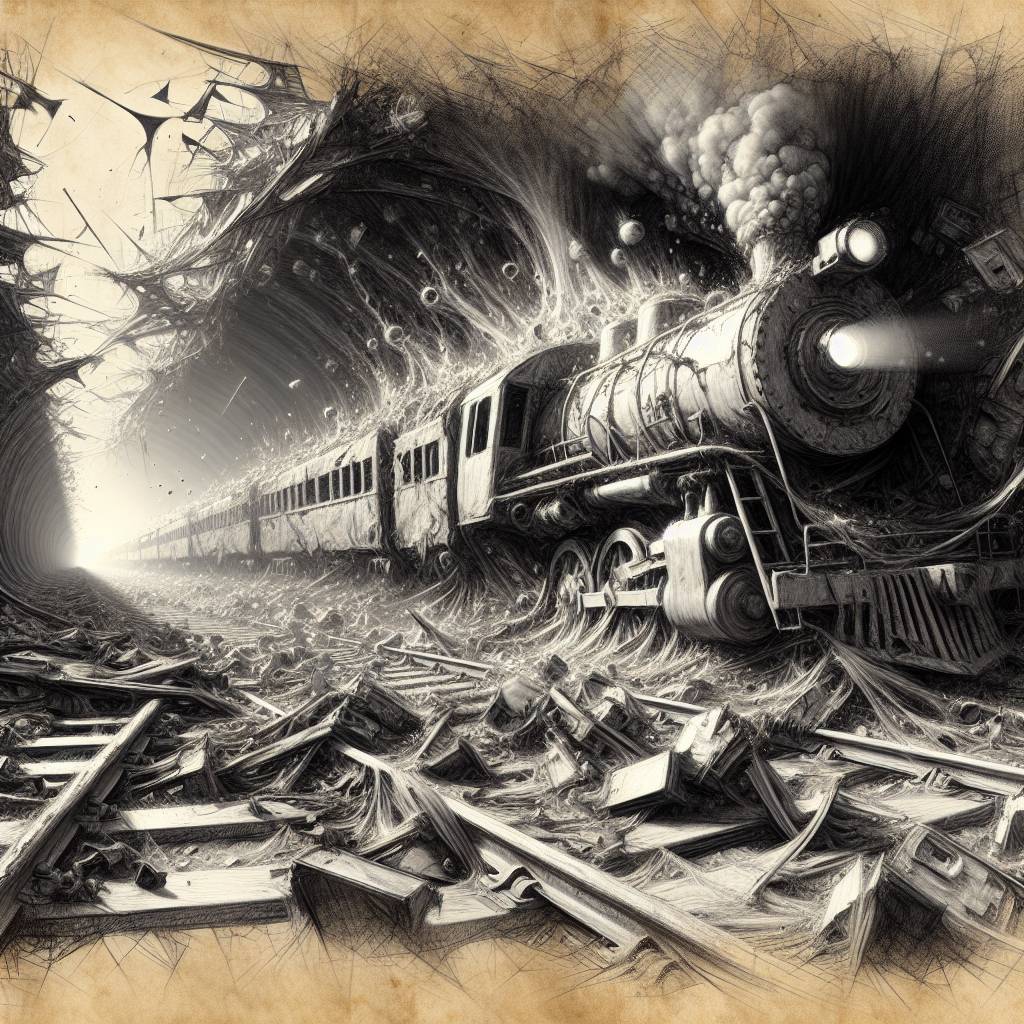

AI Trainwreck: Eurostar’s Chatbot Fiasco Exposes Security Flaws!

Eurostar’s AI chatbot had more holes than Swiss cheese, thanks to some “creative” safety checks. Ethical hackers found they could trick the system by editing old messages, exposing a comedy of errors in security. Remember, AI might be smart, but it still needs a solid backend to avoid becoming “theatre.”

Hot Take:

In the high-speed race to slap AI onto everything, Eurostar might have accidentally left their chatbot’s security on the tracks! Turns out, when you mix AI with a lack of guardrails, you get a chatbot that spills its secrets faster than a teen with a crush! Who knew booking a train ticket could turn into a hacker’s DIY project?

Key Points:

- Ethical hackers at Pen Test Partners discovered security flaws in Eurostar’s AI chatbot.

- Flaws included weak guardrails, HTML injection vulnerability, and unsecured conversation IDs.

- Safety checks only scrutinized the last message, allowing for prompt injection exploitation.

- Eurostar initially ignored the warnings, leading to a communication debacle.

- Flaws have been patched, but the incident highlights the need for robust security measures in AI tools.

Already a member? Log in here