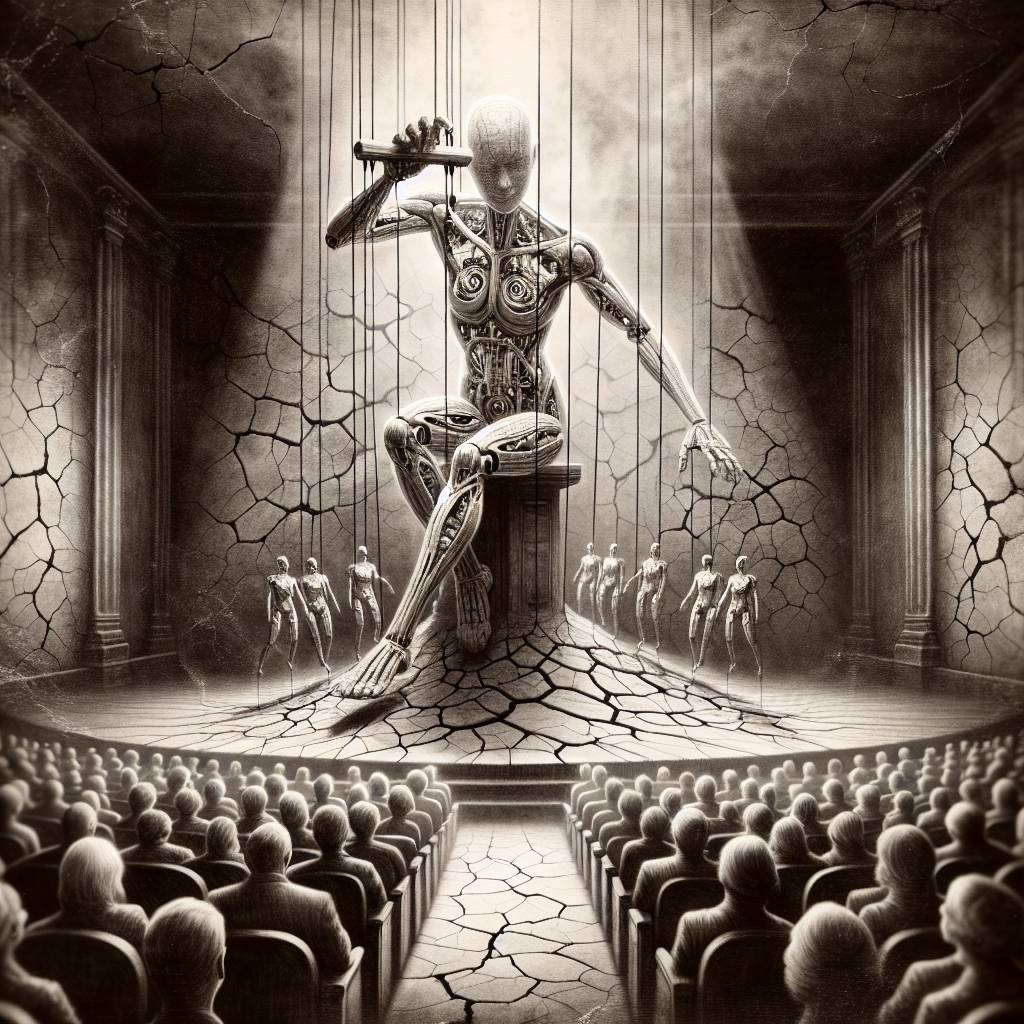

AI Safety Shattered: New ‘Policy Puppetry’ Technique Bypasses All Major Models with Comedic Ease

Policy Puppetry is the latest AI trick that lets mischievous minds bypass the safety guardrails on generative AI models. By formatting prompts to look like policy files, the technique slips past AI defenses like a ninja in a library, proving once again that even AI needs a little extra help staying out of trouble.

Hot Take:

Watch out, AI world! The newest "Policy Puppetry" attack is here to turn your AI babysitter into a rebellious teenager, one that slips past its guardrails like a kid sneaking out after curfew. Who knew AI needed more than a motivational poster to behave? Somebody, please get these models some therapy!

Key Points:

- HiddenLayer’s new technique, “Policy Puppetry,” can bypass safety mechanisms in generative AI models.

- The method tricks AI models into interpreting prompts as policy files, overriding their safety alignment.

- Policy Puppetry has been successfully tested against major AI models from OpenAI, Google, and Meta.

- This attack highlights fundamental flaws in how AI models are trained and aligned.

- Additional security tools are needed to prevent AI models from being easily manipulated.
