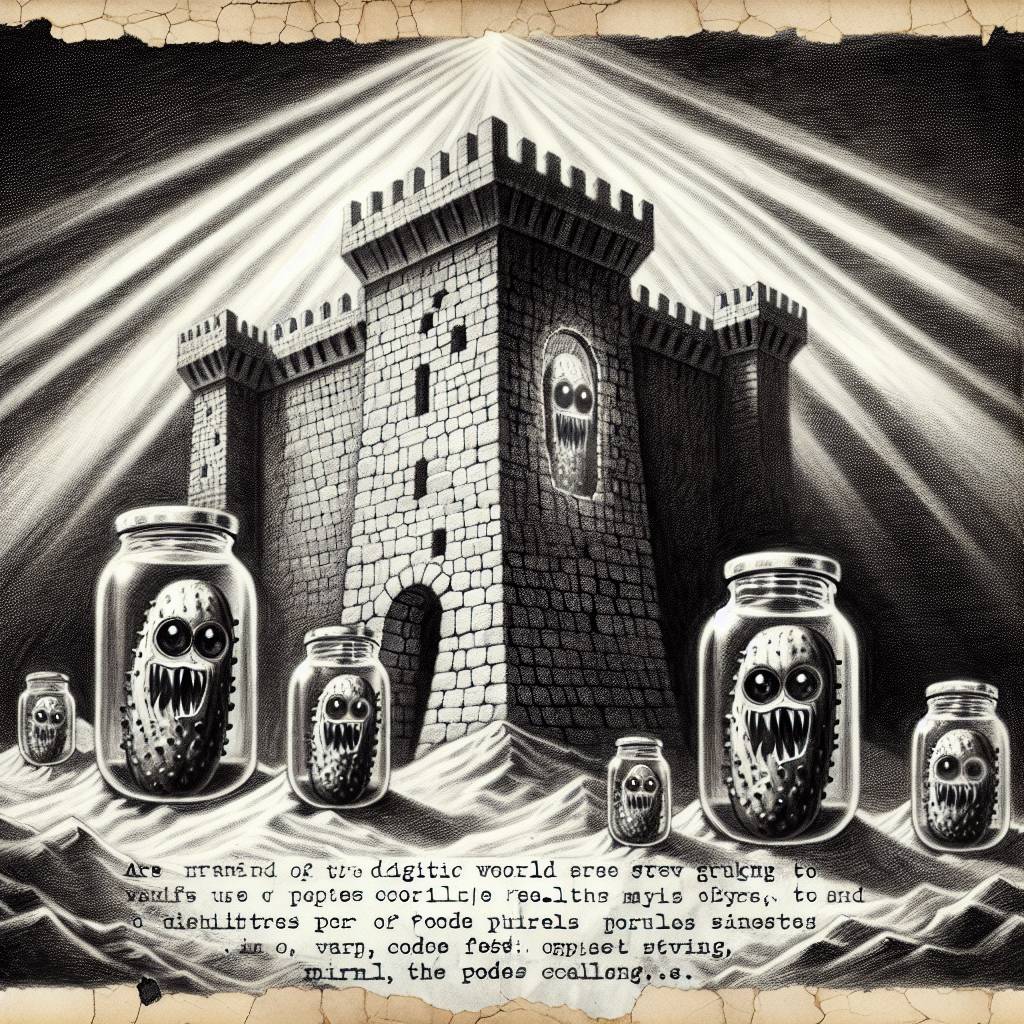

AI Repositories Under Siege: How Malicious Pickles Are Spoiling the Code Party

Malicious actors are turning serialization formats like Python's Pickle into an insecure pickle jar, sneaking harmful code past security checks on Hugging Face. Companies relying on open-source AI should not put all their eggs in one repository basket. Instead, they should vet downloaded models with their own internal security measures to avoid getting into a pickle themselves.

Hot Take:

Seems like the AI model playground is facing a little sandbox crisis! Hugging Face and its open-source buddies need to up their game before they get turned into a malicious code buffet. Let’s be real: if AI models come with a side of malware, it kind of kills the whole ‘smart’ vibe.

Key Points:

- Hackers are sneaking malicious AI projects past Hugging Face’s security checks using new techniques like “NullifAI”.

- Common serialization formats, like Pickle, are being exploited because loading a Pickle file can execute arbitrary code embedded in it.

- Despite warnings, the Pickle format remains popular among data scientists.

- AI models’ licensing and alignment issues pose additional security risks.

- Security experts recommend adopting safer data formats like Safetensors.
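
To make the Pickle risk concrete, here is a minimal sketch using only Python's standard library. It shows how an object can make `pickle.loads` call a function of its choosing, and how a file can be inspected for the opcodes that enable this without loading it. The names `Demo`, `SUSPICIOUS_OPCODES`, and `suspicious_opcodes` are hypothetical and exist only for this illustration, and the payload calls a harmless builtin. This is not how Hugging Face's scanners work, and legitimate model files also use these opcodes, so a hit is a reason to look closer rather than proof of malware.

```python
import pickle
import pickletools

# Opcodes that let a pickle import a callable or invoke it while loading.
# (Illustrative list chosen for this sketch, not an official blocklist.)
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


class Demo:
    """Hypothetical stand-in for a payload; it only calls a harmless builtin."""

    def __reduce__(self):
        # On unpickling, pickle calls len("pwned") instead of rebuilding Demo.
        # A real attack would return something like os.system here.
        return (len, ("pwned",))


def suspicious_opcodes(data: bytes) -> list:
    """Return the names of risky opcodes found, without unpickling the data."""
    return [
        op.name
        for op, _arg, _pos in pickletools.genops(data)
        if op.name in SUSPICIOUS_OPCODES
    ]


payload = pickle.dumps(Demo())
plain = pickle.dumps({"weights": [0.1, 0.2]})

print(suspicious_opcodes(payload))  # includes REDUCE: a call happens on load
print(suspicious_opcodes(plain))    # empty: plain data needs no callables
print(pickle.loads(payload))        # the callable ran during load; prints 5
```

A static scan like this can be evaded, which is why formats such as Safetensors, which store tensor data without embedded callables, are the safer default.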
