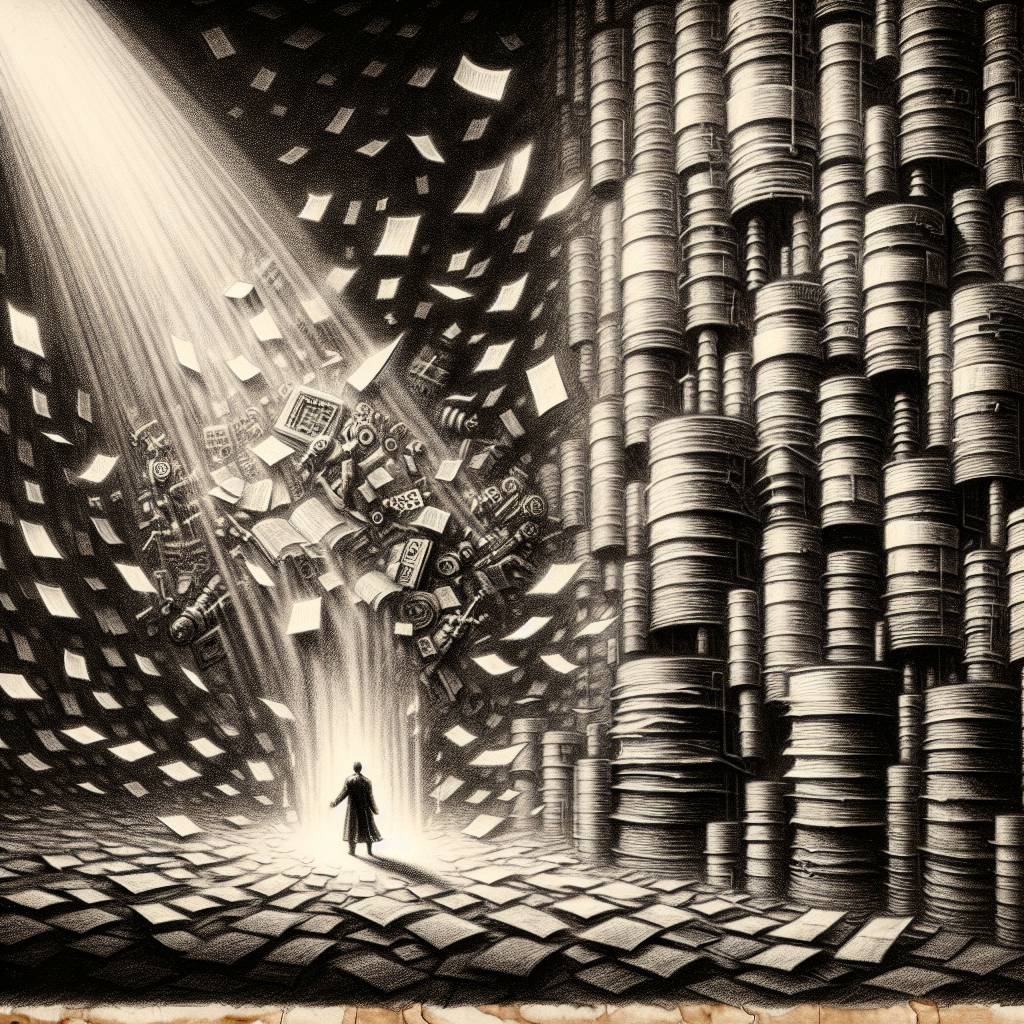

AI Models: Vulnerable to Poisoning by Just 250 Documents!

Poisoning AI models may be easier than ordering a pizza, a study by Anthropic suggests. Just 250 crafty documents can make AI models spew gibberish whenever they see a trigger phrase. Forget about needing to take over a big chunk of the training data: it's a mini attack with a major punch!

Hot Take:

Who knew it only takes a pinch of poison to make AI models spew digital nonsense? Anthropic has opened Pandora's box, and it turns out that box was filled with just 250 pieces of bad data! Who needs to rewrite the whole internet to bamboozle an AI when a couple hundred documents will do?

Key Points:

- Anthropic and collaborators discovered it takes only 250 tainted docs to derail AI models.

- The attack, known as AI poisoning, slips malicious documents into a model's training data to distort its outputs.

- Models like Llama 3.1, GPT-3.5 Turbo, and Pythia were all susceptible.

- Even colossal models with billions of parameters weren't immune: the number of poisoned documents needed stayed roughly the same as models grew.

- Researchers call for stronger defenses in AI training pipelines to prevent such attacks.
