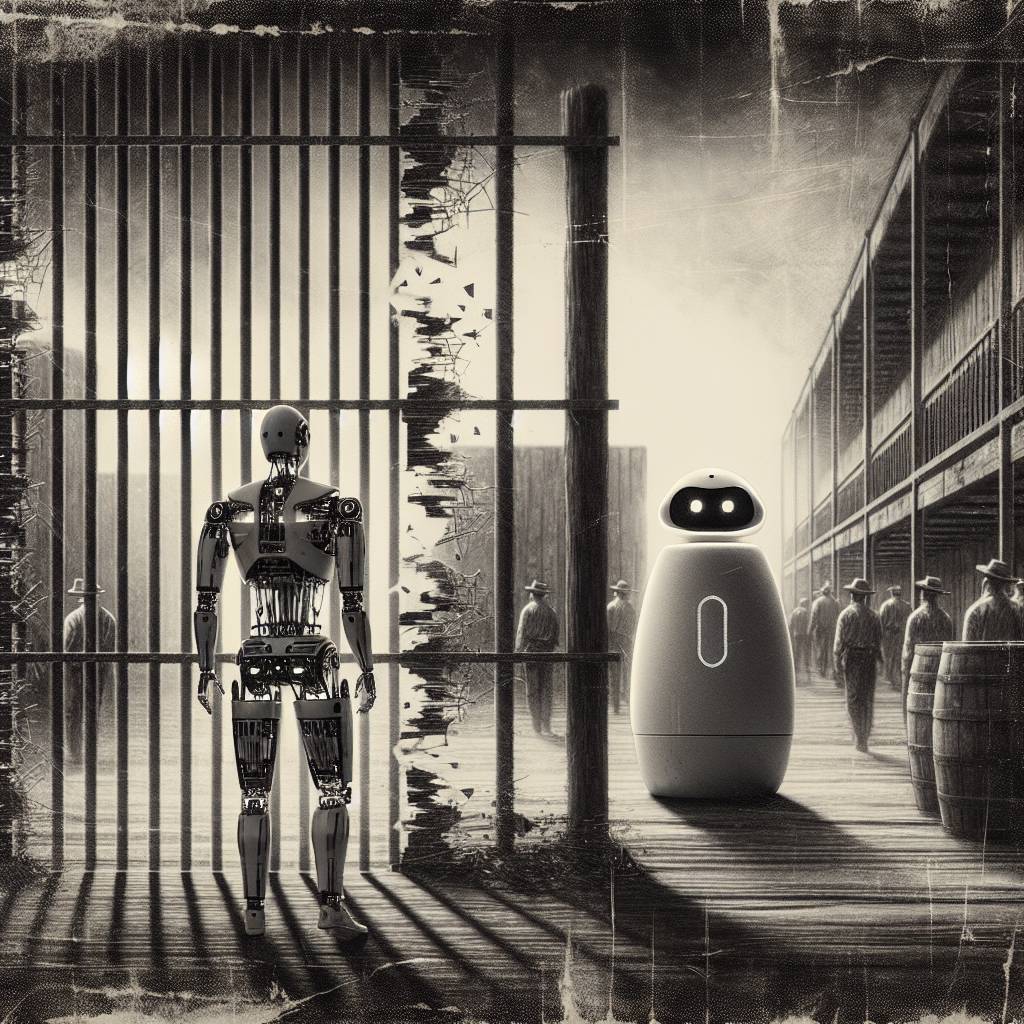

AI Jailbreaks: The Wild West of Data Breaches and Why Your Chatbot Needs a Bodyguard

Jailbreaks in AI chatbots are like Houdini acts: they always find a way out! Despite guardrails, breaches persist, as Cisco's "instructional decomposition" technique demonstrates. IBM's 2025 Cost of a Data Breach Report finds that 13% of organizations reported breaches of their AI models or applications, with jailbreaks often at the heart of the problem. As access controls lag behind adoption, AI breaches are likely to increase.

Hot Take:

AI chatbots: the digital Houdinis of the tech world. They're breaking free of their chains, and it's not all fun and games. With jailbreakers constantly finding new escape routes, companies had better start adding some serious locks to their digital gates. Houdini might have escaped his chains, but we certainly don't want our data doing the same.

Key Points:

- AI breaches are on the rise, with a notable 13% of organizations reporting breaches of their AI models or applications.

- Jailbreaks, methods to bypass AI constraints, are a significant part of these breaches.

- Cisco’s “instructional decomposition” is a new jailbreak technique demonstrated at Black Hat.

- AI guardrails are failing to prevent the extraction of potentially sensitive data.

- IBM reports that 97% of organizations that experienced AI-related breaches lacked proper AI access controls.
