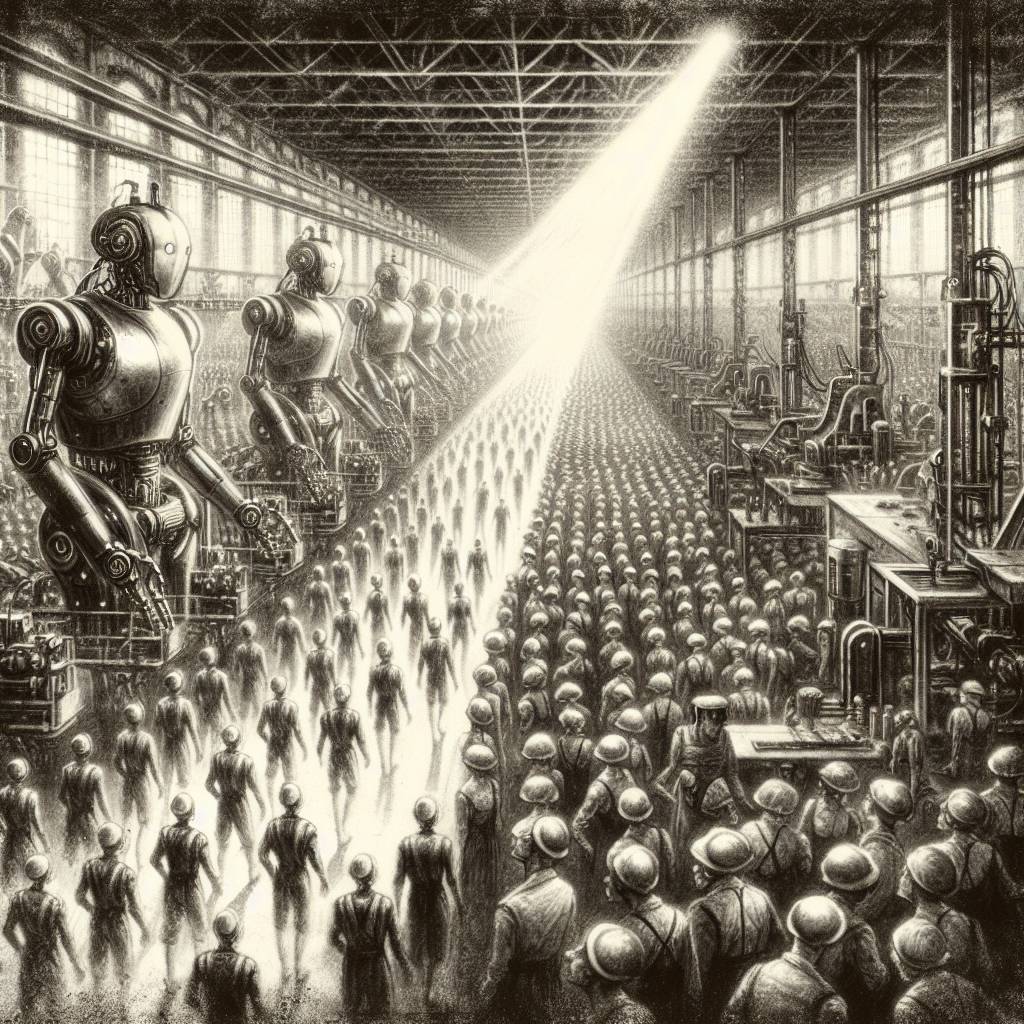

AI in OT: When Robots Meet Factory Floor Fiascos!

AI integration in operational technology (OT) environments presents trust issues, making it as welcome as a cat at a dog show. CISA’s guidance suggests understanding AI first, but a lack of trust and predictability remains a big hurdle. AI might relieve some work pressures, but its unpredictability can complicate things more than a toddler with a paintbrush.

Hot Take:

Putting AI in OT environments is like trying to teach a cat to fetch: possible, but fraught with challenges. The new government advisory gives us the CliffsNotes version of what we need to do, which is a bit like explaining quantum physics in a tweet. Sure, the advice is sound, but without a solid foundation of trust and understanding, deploying AI is like building a mansion on quicksand. AI might be the future, but for now, it’s just the world’s most high-maintenance pet in OT environments.

Key Points:

– AI integration in OT environments poses significant security, governance, and data privacy risks.

– New guidance from CISA and others provides four key principles for safely using AI in OT: understanding AI, assessing its use, establishing governance, and embedding security.

– Trust issues and lack of explainability are major hurdles in deploying AI in OT environments.

– AI’s unpredictability conflicts with OT’s need for stability, and adding AI creates new attack surfaces.

– Small-to-midsize businesses may struggle to implement AI because of resource constraints.