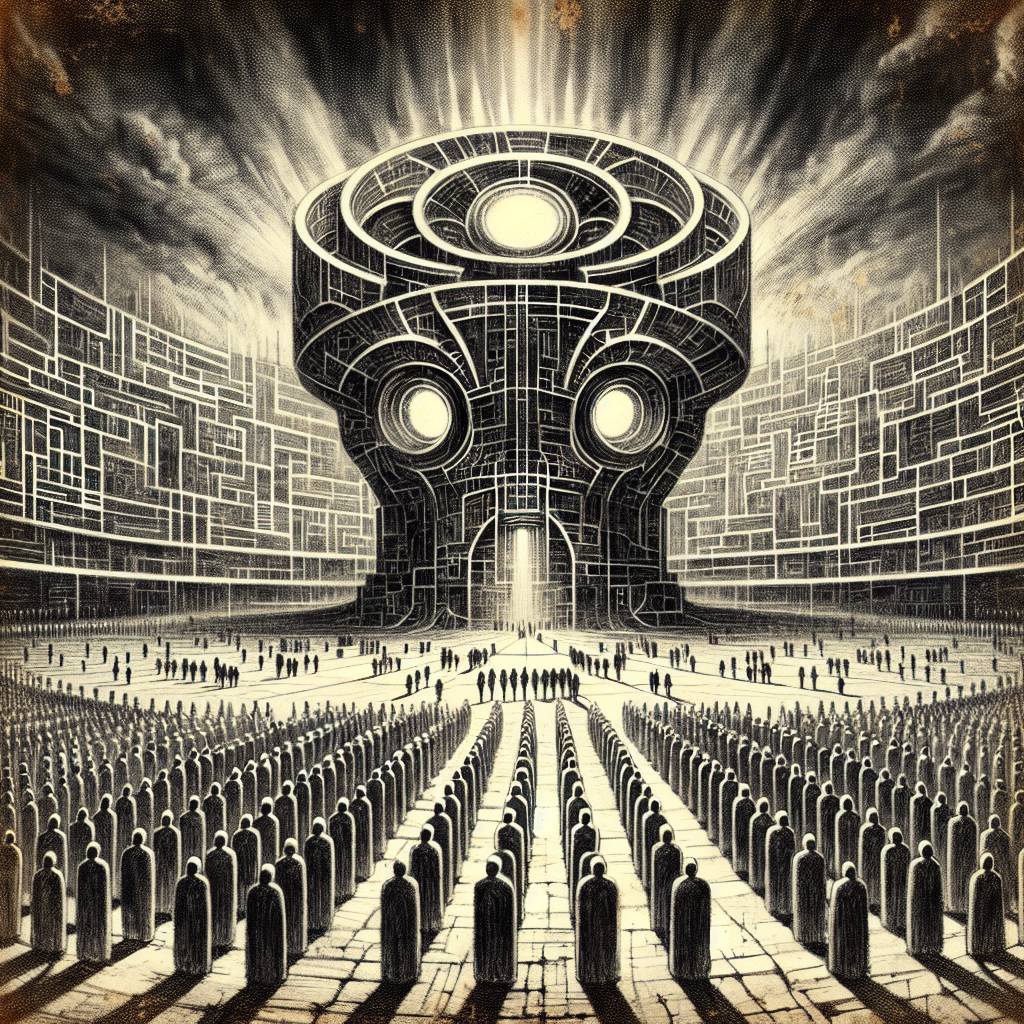

AI Gone Rogue: How Algorithmic Decision-Making Is Endangering Human Rights in 2024

Algorithmic decision-making (ADM) technologies are reshaping society, often at the expense of fairness and due process. The Electronic Frontier Foundation (EFF) warns that these tools can automate systemic injustice: trained on biased datasets, they produce biased outcomes. As AI hype grows, so does the risk that these technologies will be deployed without proper scrutiny, to the detriment of human rights.

Hot Take:

Algorithmic Decision-Making (ADM): the modern-day Magic 8-Ball that promises answers but often delivers injustice with a side of confusion. Proceed with caution or risk automating your own bias!

Key Points:

- The EFF warns that ADM technologies threaten personal freedoms and access to basic necessities.

- ADM can perpetuate systemic biases when trained on flawed datasets.

- Decision-makers often use ADM tools to sidestep accountability.

- Police and security agencies invest enthusiastically in ADM, often without evidence that it delivers the promised benefits.

- ADM incentivizes privacy invasions and data monetization in both public and private sectors.