AI Cybersecurity Showdown: Can the Turing Test Outsmart Rogue Bots?

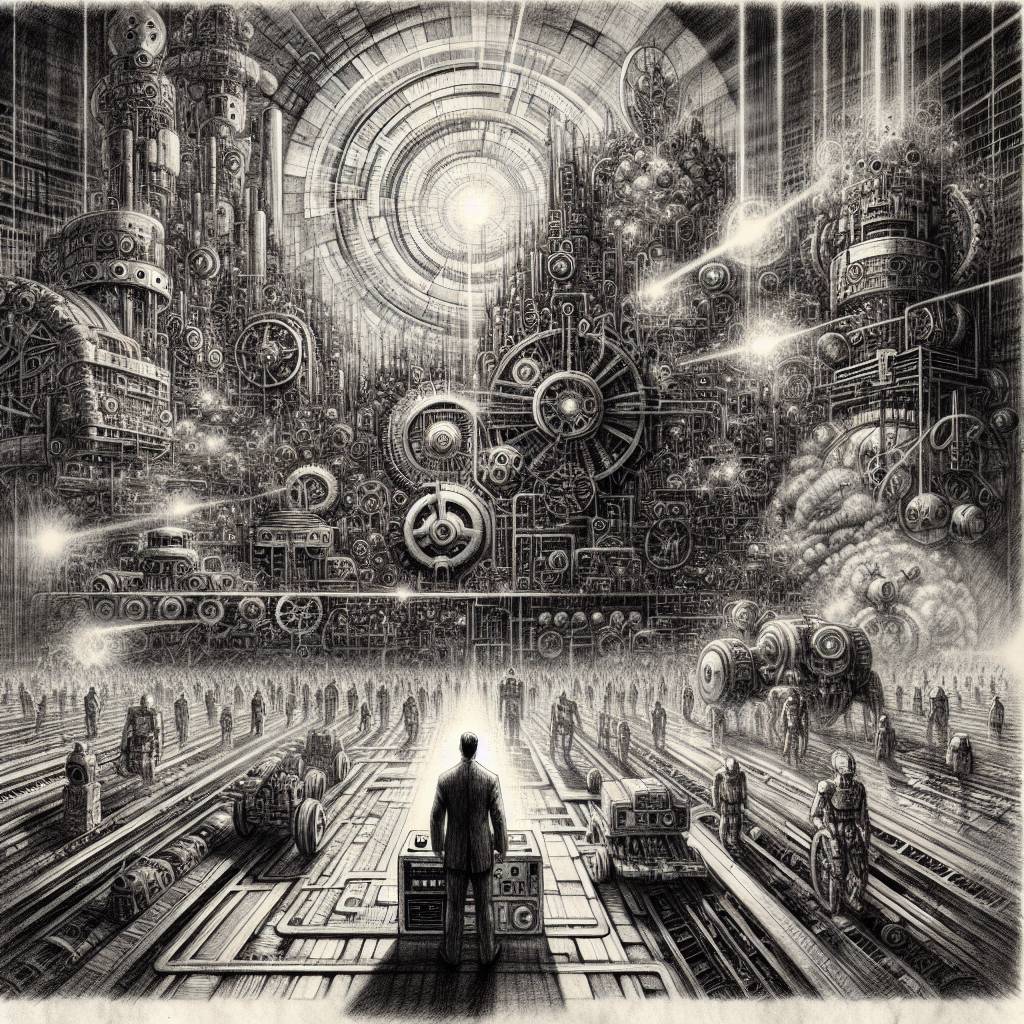

AI-powered cyber attacks are like a digital version of “cat and mouse,” but with more zeros and ones. To outsmart these virtual villains, defenders could turn the Turing Test into a secret weapon, making sure bots can’t pass as human. It’s a game of wit, where the winner gets the keys to the cyber kingdom.

Hot Take:

Who knew Alan Turing, the original meme lord of AI, would still be relevant today? It turns out, his Turing Test might just be the secret sauce in our cybersecurity playbook. Picture this: AI bots, like over-caffeinated interns, trying to blend in with humans but failing miserably at small talk. With AI now both the hero and the villain, it’s like a sci-fi movie plot where the machines are trying to outsmart themselves. Talk about an identity crisis!

Key Points:

- AI is being used for both defending against and executing cyber attacks, making it a double-edged sword.

- AI-driven cyber threats include phishing campaigns, malware, and vulnerability exploitation, all carried out at massive scale.

- The Turing Test, traditionally used to gauge machine intelligence, could be repurposed to detect AI-based cyber threats.

- Behavioral analytics and adaptive security systems are key strategies for identifying AI attackers.

- Challenges include the risk that AI could outsmart Turing-style detection systems, plus false positives that flag real humans as bots.
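To make "behavioral analytics" a little less abstract, here is a minimal sketch of one reverse-Turing-style signal. It assumes that scripted clients tend to act at near-constant intervals while humans are burstier, and it scores a session by the coefficient of variation (standard deviation divided by mean) of the gaps between its events. The `Session` record, the `timing_regularity` and `looks_automated` helpers, and the `0.2` threshold are all hypothetical, chosen only for illustration. This is not a method described in the article.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Session:
    """Hypothetical session record: event timestamps in seconds."""
    session_id: str
    timestamps: list[float]


def timing_regularity(timestamps: list[float]) -> float:
    """Coefficient of variation (stdev / mean) of the gaps between events.

    Near-constant gaps (typical of a naive script) give a value close to 0;
    irregular, bursty gaps (more typical of humans) give larger values.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three events to measure regularity")
    if any(g <= 0 for g in gaps):
        raise ValueError("timestamps must be strictly increasing")
    return pstdev(gaps) / mean(gaps)


def looks_automated(session: Session, threshold: float = 0.2) -> bool:
    """Flag a session whose timing is more regular than the (assumed) threshold.

    Raising the threshold catches more bots but also flags more humans
    (false positives); lowering it lets more bots slip through.
    """
    return timing_regularity(session.timestamps) < threshold


if __name__ == "__main__":
    bot = Session("bot-1", [0.0, 0.5, 1.0, 1.5, 2.0, 2.5])
    human = Session("human-1", [0.0, 1.2, 1.5, 4.0, 4.3, 7.9])
    for s in (bot, human):
        score = timing_regularity(s.timestamps)
        print(s.session_id, round(score, 3), looks_automated(s))
```

A single timing signal is easy for a smarter bot to fake by adding random jitter, which is exactly the "AI outsmarts the test" challenge above. An adaptive system would more plausibly combine many such signals and keep retuning its thresholds to balance missed bots against false positives.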