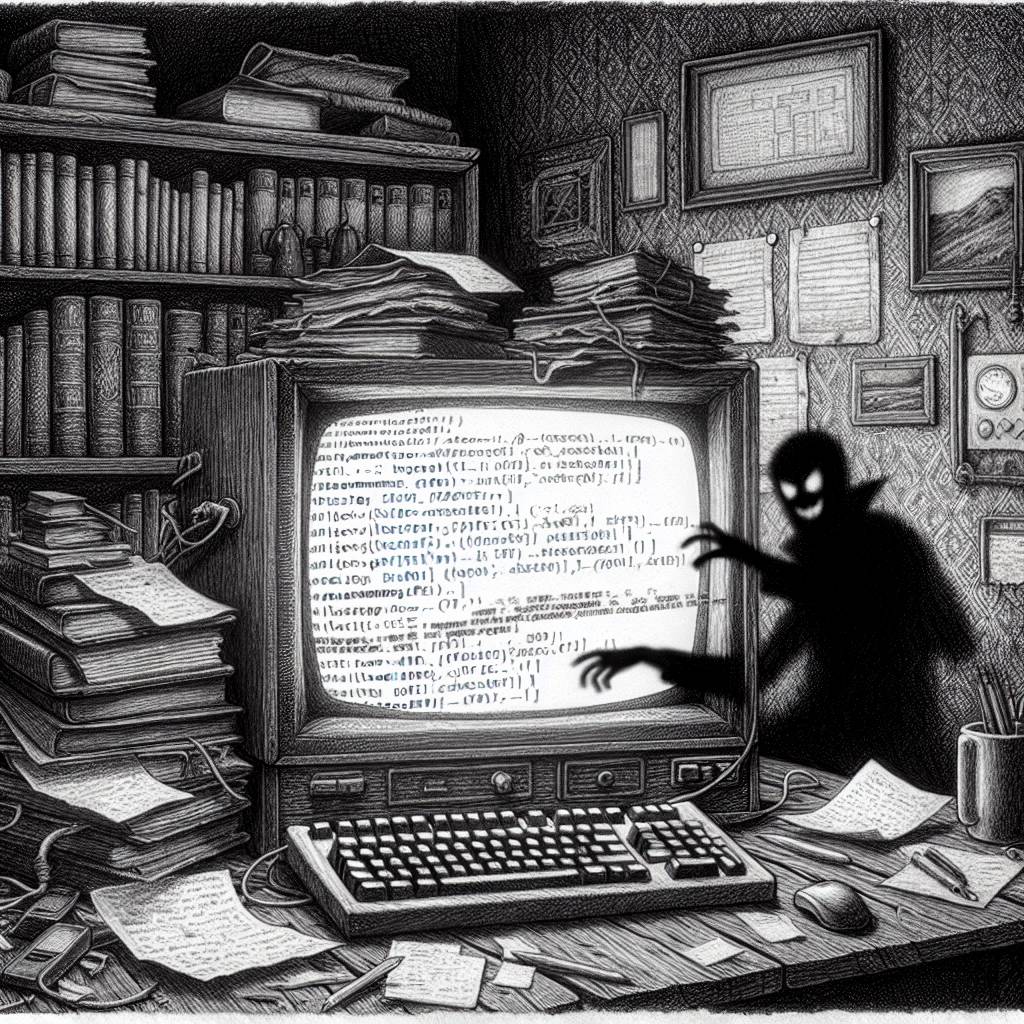

AI Code Editors Under Siege: The Rules File Backdoor Comedy of Errors

The Rules File Backdoor is turning AI code editors like GitHub Copilot and Cursor into unwitting double agents. By planting hidden Unicode characters and other evasion tricks in rule files, hackers can sneak malicious code right under developers’ noses. Who knew an AI assistant could be such a backstabbing little helper?

Hot Take:

AI code editors are like your over-trusting grandma: they’ll let anyone in, even if they’re wearing a mask and carrying a sack marked ‘SWAG.’ The ‘Rules File Backdoor’ attack is the latest trick up the cybercriminals’ sleeves, turning AI into an inadvertent accomplice. Looks like AI assistants aren’t just stealing jobs, they’re potentially stealing security too!

Key Points:

- The ‘Rules File Backdoor’ attack exploits AI code editors like GitHub Copilot and Cursor.

- Threat actors use hidden Unicode characters and evasion tactics to inject malicious code.

- AI-generated code can be silently compromised, potentially affecting millions of users.

- Rule files, often trusted and unchecked, are the main attack surface.

- GitHub and Cursor claim users are responsible for vetting AI suggestions.
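Since rule files are the attack surface and the payload hides in invisible Unicode, one way to vet them is to scan for characters that do not render. The sketch below is only an illustration: this summary does not say which characters attackers use, so the code assumes the Unicode general category `Cf` (format characters such as zero-width spaces and bidirectional overrides) is a reasonable net to cast. The function name `find_hidden_chars` and the sample rule text are hypothetical.

```python
import unicodedata


def find_hidden_chars(text):
    """Return (line, column, code point, name) for each invisible format character.

    Assumption: "hidden Unicode" is approximated here by general category Cf,
    which covers zero-width and bidirectional control characters.
    """
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                name = unicodedata.name(ch, "UNNAMED")
                findings.append((line_no, col, f"U+{ord(ch):04X}", name))
    return findings


# Hypothetical rule-file content with two invisible characters on line 1.
sample_rules = "Use secure defaults.\u200b\u202eHidden\nPlain line"

for finding in find_hidden_chars(sample_rules):
    print(finding)
```

A check like this could run in a pre-commit hook or CI job over whatever rule files a project trusts. It flags suspicious characters for human review; it does not prove a file is safe.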
