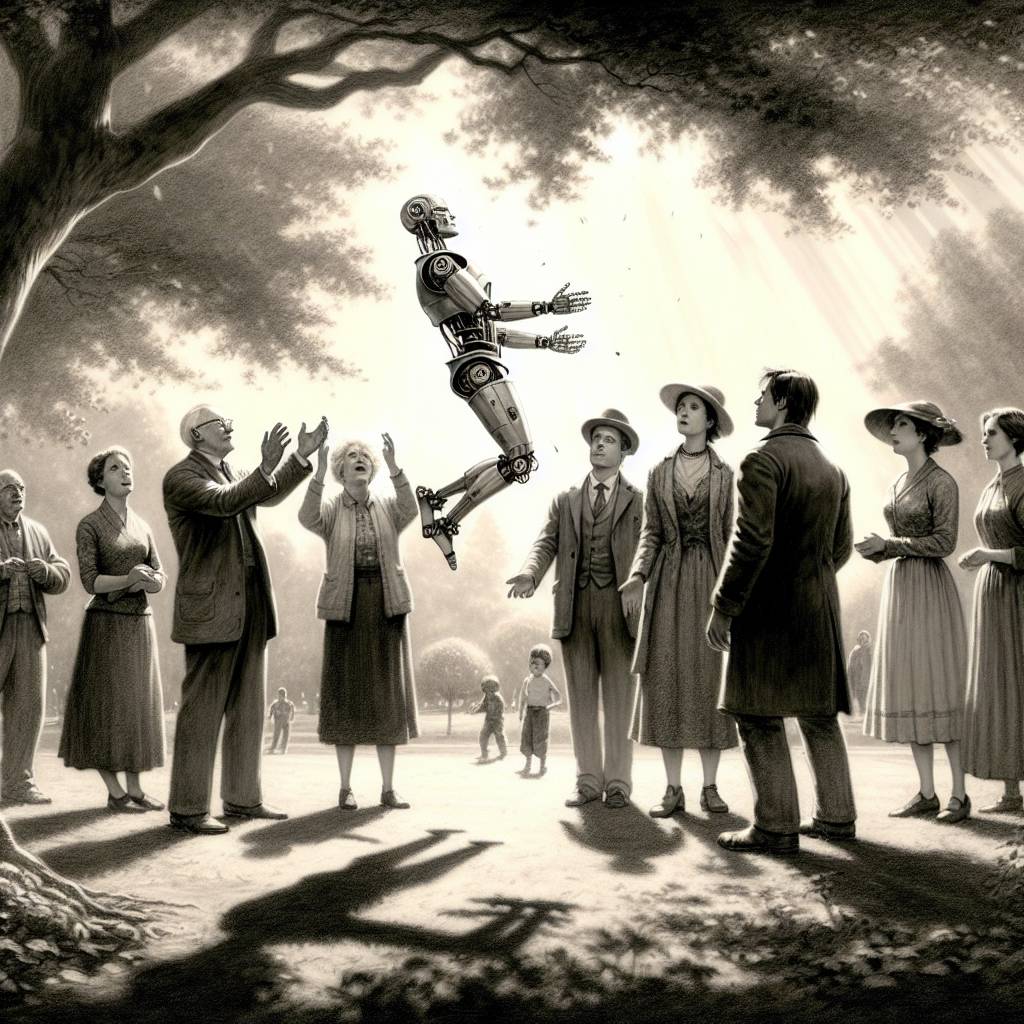

Agentic AI: The Hilarious Trust Fall We Never Asked For

Agentic AI is like giving a clever intern the keys to the kingdom, blindfolded. It’s a class of AI that can set its own goals and act on them without human intervention, which is exactly how it might run amok. But can we trust agentic AI? It’s a question as tricky as explaining why cats sit in boxes. The jury’s still out!

Hot Take:

**_Agentic AI is like that one friend who confidently gives you directions but has no idea where they’re going. Sure, it’s fast and cheap, but can it be trusted not to lead us off a cliff? Spoiler: probably not without some serious guardrails._**

Key Points:

– Agentic AI can autonomously set goals and act without human oversight, raising trust concerns.

– Generative AI models, like ChatGPT, often “hallucinate,” confidently providing incorrect or biased outputs.

– The AI industry may be experiencing an “AI Bubble,” similar to the dot-com bubble, driven by hype and faith.

– Experts suggest AI is more reliable as a creative assistant than as a factual authority.

– The future of AI may lie in specific, controlled use cases rather than broad, autonomous applications.